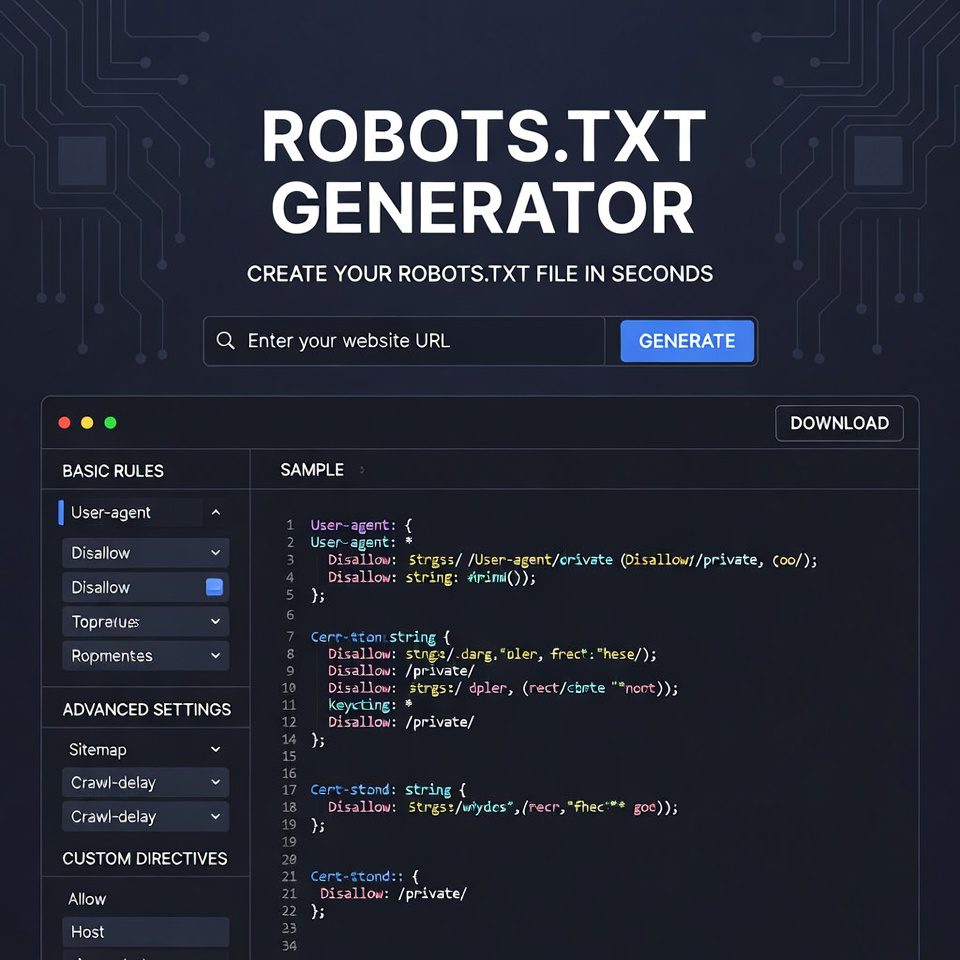

Robots.txt Generator

Create a customized robots.txt file to control how search engines crawl your website. A properly configured robots.txt file tells search engine crawlers which parts of your site they may crawl and which they should skip.

How to use: Fill in the fields below to generate a robots.txt file tailored to your website’s needs. Once generated, copy the code and save it as “robots.txt” in your website’s root directory.

Specify paths you want to block search engines from crawling:

Specify paths you explicitly want to allow (a more specific Allow rule overrides a broader Disallow rule):

Specify how many seconds search engines should wait between requests (not supported by all search engines):



Introduction

Want to control which parts of your website search engines can crawl? Need to keep crawlers out of admin areas or low-value pages? The robots.txt file acts as your website’s traffic director for search engine bots. A robots.txt generator lets you create this file without writing the syntax by hand. This guide explains robots.txt fundamentals and shows how our free tool helps you manage crawler access.

What is a Robots.txt Generator?

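A robots.txt generator is an online tool that creates a robots.txt file specifying which areas of your website search engines should or shouldn’t crawl. It uses the standard User-agent, Allow, Disallow, and Sitemap directives that major search engines understand, plus optional extensions such as Crawl-delay that only some crawlers honor.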

Our professional generator creates:

- Standard-compliant robots.txt files

- Custom rules for specific search engines

- Allow/disallow directives for precise control

- Sitemap references for better indexing

- Crawl delay settings to manage server load

Why Robots.txt Files Are Essential

Proper robots.txt configuration directly affects how efficiently search engines crawl your site. It is not a security mechanism: the file is publicly readable, and compliance by crawlers is voluntary.

1. Crawl Budget Optimization

Steer search engines away from low-value URLs so they spend more of their crawl budget on your most important pages.

2. Private Content Protection

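Keep crawlers out of areas like admin pages and staging sites. A blocked URL can still be indexed if other sites link to it, so use noindex or password protection for content that must stay out of search results.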

3. Duplicate Content Prevention

Block search engines from crawling printer-friendly pages, URLs with session IDs, and other sources of duplicate content.

4. Server Resource Management

Use Crawl-delay to ask crawlers to slow down so they do not overwhelm your server during peak traffic periods. Not every search engine honors this directive.

Key Features of Our Robots.txt Generator

Our tool provides enterprise-level robots.txt creation with these user-friendly features.

- Visual Rule Builder: Create rules without learning syntax

- Search Engine Specificity: Set different rules for Google, Bing, and others

- Syntax Validation: Automatic error checking before generation

- Download Ready: Get your properly formatted file instantly

- Expert Templates: Start with proven configurations for common website types

Common Robots.txt Generator Scenarios

Here are practical situations where our generator provides immediate solutions.

E-commerce Websites

- Allow crawling of product pages and categories

- Disallow crawling of shopping cart and checkout pages

- Block crawling of duplicate content, such as URLs that differ only by sort parameters

Blog and Content Sites

- Ensure all article pages get crawled

- Disallow admin and login pages

- Block search engines from tag pages if they create duplicate content

Development Sites

- Completely block search engines from staging environments

- Prevent accidental indexing of test content

- Allow specific bots for SEO testing purposes
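As a rough illustration of the development-site scenario, the sketch below blocks every crawler from a staging host while leaving one testing bot unrestricted, then checks the result with Python’s standard urllib.robotparser module. The crawler name SEOTestBot and the staging.example.com host are made up for this example.

```python
from urllib.robotparser import RobotFileParser

# "SEOTestBot" is a hypothetical crawler name used only for illustration.
STAGING_RULES = """\
User-agent: SEOTestBot
Disallow:

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(STAGING_RULES.splitlines())

url = "https://staging.example.com/new-page"  # hypothetical staging URL
seo_bot_ok = parser.can_fetch("SEOTestBot", url)
googlebot_ok = parser.can_fetch("Googlebot", url)

print(seo_bot_ok, googlebot_ok)  # True False
```

An empty Disallow value means "nothing is blocked" for that bot. Since well-behaved crawlers follow these rules voluntarily, password protection remains the more dependable way to keep a staging site private.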

How to Generate a Robots.txt File

Creating your custom robots.txt file takes just three simple steps.

- Select Your Rules: Choose which pages to allow or disallow

- Configure Settings: Set crawl delays and sitemap location

- Download File: Get your ready-to-use robots.txt file
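To make the three steps concrete, here is a minimal sketch of what a generator might do behind the scenes. It is not the code behind our tool; the function name build_robots_txt, its parameters, and the example paths are all assumptions made for illustration.

```python
from typing import Iterable, Optional


def build_robots_txt(
    user_agent: str = "*",
    disallow: Iterable[str] = (),
    allow: Iterable[str] = (),
    crawl_delay: Optional[int] = None,
    sitemap: Optional[str] = None,
) -> str:
    """Assemble a robots.txt body containing a single group of rules."""
    allow, disallow = list(allow), list(disallow)
    lines = [f"User-agent: {user_agent}"]
    # Allow lines go first so parsers that stop at the first match
    # still honor the exceptions.
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    if not allow and not disallow:
        lines.append("Disallow:")  # an empty value blocks nothing
    if crawl_delay is not None:
        lines.append(f"Crawl-delay: {crawl_delay}")  # ignored by some crawlers
    if sitemap:
        # Sitemap lines are not tied to a user-agent group.
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt(
    disallow=["/cart/", "/checkout/"],
    allow=["/cart/help/"],
    crawl_delay=10,
    sitemap="https://example.com/sitemap.xml",
)
print(robots_txt)
```

The returned string can be saved as robots.txt and uploaded to your root directory. A real generator would also validate the paths and support several user-agent groups.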

Robots.txt Generator Best Practices

Follow these guidelines for effective search engine control.

Place in Root Directory

Always put your robots.txt file in your website’s main directory (example.com/robots.txt).
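Crawlers look for the file at the root of each host, so a subdomain needs its own robots.txt. The short sketch below derives that location from any page URL; the helper name robots_url and the example URLs are assumptions for illustration.

```python
from urllib.parse import urljoin


def robots_url(page_url: str) -> str:
    """Return the robots.txt location for the host that serves page_url."""
    # Joining with an absolute path replaces the page's path and query.
    return urljoin(page_url, "/robots.txt")


print(robots_url("https://example.com/blog/post?id=1"))  # https://example.com/robots.txt
print(robots_url("https://shop.example.com/cart"))       # https://shop.example.com/robots.txt
```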

Keep It Simple

Only use directives you actually need. Overly complex files can cause unintended blocking.

Test Thoroughly

Use the robots.txt report in Google Search Console, or another robots.txt testing tool, to verify that your rules work as intended.
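You can also run a quick local check before uploading. The sketch below uses Python’s standard urllib.robotparser module to compare a rule set against a list of URLs and the outcome you expect for each; the rules and URLs are hypothetical. Keep in mind that this parser applies the first matching rule and does not understand wildcards, so its results can differ from a specific search engine’s behavior on more complex files.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Allow: /admin/public/
Disallow: /admin/
Disallow: /login
"""

# Expected crawlability for a few representative (hypothetical) URLs.
EXPECTED = {
    "https://example.com/": True,
    "https://example.com/blog/first-post": True,
    "https://example.com/admin/settings": False,
    "https://example.com/admin/public/faq.html": True,
    "https://example.com/login": False,
}

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Collect every URL whose actual result differs from the expectation.
mismatches = [
    url for url, expected in EXPECTED.items()
    if parser.can_fetch("*", url) != expected
]
print("All rules behave as expected" if not mismatches else mismatches)
```

Adding a URL to EXPECTED each time you introduce a new rule turns this into a small regression check for your file.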

Update Regularly

Review and update your robots.txt file whenever you add new sections to your website.

Understanding Robots.txt Directives

These core commands control search engine behavior on your site.

User-agent

Specifies which search engine bot the rules apply to (use * for all bots).

Disallow

Blocks bots from crawling specific directories or pages.

Allow

Overrides disallow rules for specific content within blocked sections.

Sitemap

Tells search engines where to find your XML sitemap.
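When Allow and Disallow rules both match a URL, crawlers that follow the current robots.txt standard (RFC 9309), Google among them, apply the most specific rule, meaning the one with the longest matching path, and prefer Allow on a tie. The sketch below is a simplified illustration of that precedence; it ignores the * and $ wildcards, and the function name and example paths are assumptions.

```python
from typing import List, Tuple

Rule = Tuple[str, str]  # (directive, path prefix), e.g. ("Disallow", "/private/")


def is_allowed(path: str, rules: List[Rule]) -> bool:
    """Longest matching prefix wins; Allow wins when lengths are equal."""
    best_len = -1
    allowed = True  # a path that matches no rule may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # skip empty values and non-matching prefixes
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len, allowed = len(prefix), is_allow
    return allowed


rules = [("Disallow", "/private/"), ("Allow", "/private/press/")]
print(is_allowed("/private/notes.html", rules))     # False
print(is_allowed("/private/press/kit.pdf", rules))  # True
print(is_allowed("/about", rules))                  # True
```

Some older parsers stop at the first matching line instead, so listing the more specific Allow rule before the broader Disallow rule keeps the outcome the same either way.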

Conclusion

A properly configured robots.txt file is essential for guiding search engines to your most valuable content and keeping crawlers out of areas that do not belong in search. A reliable robots.txt generator removes the technical complexity of creating this file while keeping it compliant with search engine standards. Whether you’re managing a small blog or an enterprise e-commerce site, controlling crawler access improves your SEO efficiency. For content that must stay private, pair it with noindex or password protection.


Ready to control search engine access? [Use our free Robots.txt Generator now] and create your custom robots.txt file in minutes!

Frequently Asked Questions (FAQs)

Is this robots.txt generator completely free?

Yes, 100% free with no usage limits, registration requirements, or hidden costs.

Will robots.txt completely hide my pages from search results?

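No, robots.txt only controls crawling. To prevent indexing, use noindex meta tags or password protection. A noindex tag only works if the page is not blocked in robots.txt, because the crawler has to fetch the page to see the tag.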

How do I add the generated file to my website?

Upload the robots.txt file to your website’s root directory using FTP or your hosting control panel.

Can I block specific search engines?

Yes, you can create rules that apply only to specific bots like Googlebot or Bingbot.
